There is nothing worse than churning out code non-stop, only to realize you have an unjustifiable number of bugs and vulnerabilities to fix before release. At Cortex, we are committed to the idea of continuous testing and making security an ongoing process to avoid last-minute chaos and poor-quality deployments.

Teams that value code quality and application security employ various methods to monitor these aspects of the software development life cycle. In this article, we take a deep dive into one of these methods, static analysis, and show how you can integrate the practice into your team’s workflow with practical tools.

What is static analysis?

Static analysis examines the source code of a program without executing it. No live testing is carried out during the process, so it sheds no light on how the program actually behaves at runtime. It is a method of debugging software right at the source, so that errors that crop up at that stage of the development life cycle can be dealt with then and there.
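To make "examining the source without executing it" concrete, here is a minimal sketch of a single static check built on Python's standard `ast` module. The rule itself (flagging direct calls to `eval`) and the function name `find_eval_calls` are our own illustration, not how any particular tool works.

```python
import ast


def find_eval_calls(source: str) -> list[int]:
    """Return the line numbers of every direct call to eval() in `source`."""
    tree = ast.parse(source)  # parses the text only; the code is never run
    return [
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.Call)
        and isinstance(node.func, ast.Name)
        and node.func.id == "eval"
    ]


SAMPLE = """\
user_input = input()
result = eval(user_input)
print(result)
"""

print(find_eval_calls(SAMPLE))  # prints [2]
```

The sample program would wait for user input if it were run, yet the check finishes immediately because the code is only parsed. Real tools apply hundreds of such rules at once.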

Unlike manual code reviews, static analysis is driven by automated tools that simplify and speed up the code analysis process. The code is checked against a set of coding guidelines to ensure that it meets the required standards and is likely to behave as intended.

The point of doing a static analysis on the code is to look at the information it provides and work with that to improve the program before it is taken to the next stage. Because this kind of analysis is not dynamic and is not testing the code in a live environment, its findings are limited. As a result, there are several bugs or nuances that such analysis is not able to capture. Despite this, there are numerous reasons to use static analysis as it comes in handy in a multitude of situations.

Why is it useful?

If you are choosing to run static analyses on your code, automating the process is a no-brainer. There is a wide range of tools that, depending on your purpose and needs, will do the work for you so that you only need to decide what to do next. If you remain unconvinced, here are a few reasons to regularly do static analyses and use a tool for the job.

Put simply, it will improve the quality of your product. Testing of any kind is important because it tells you what needs improvement. Too often, teams let seemingly minor bad practices pass and focus on shipping code, without prioritizing quality until the last moment. This inevitably slows the process down compared with following best practices from the beginning. By running a static analysis before you execute the code, you establish the practice of identifying inconsistencies and bugs in the early stages of development instead of waiting until you are forced to address them. This saves you time and effort while also encouraging developers to build high-quality software from the start.

Tasks like vulnerability scanning can benefit significantly from a static analysis. The analysis will look at your dependency management lock files and at all the libraries your services depend on to tell you which ones have known vulnerabilities. There is no subjective quality to it: either there is a known vulnerability somewhere in the software, or there isn’t.
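As a rough sketch of what such a dependency check does, the snippet below compares pinned versions against a list of known-bad versions. The `name==version` pin format, the package names, and the `ADVISORIES` table are all made up for illustration. Real scanners query maintained advisory databases and understand version ranges.

```python
# Hypothetical advisory data: package name -> versions known to be vulnerable.
ADVISORIES = {
    "examplelib": {"1.0.0", "1.0.1"},
    "otherlib": {"2.3.0"},
}


def parse_pins(lock_text):
    """Parse 'name==version' lines, skipping blank lines and comments."""
    pins = {}
    for line in lock_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, sep, version = line.partition("==")
        if sep:  # ignore lines that are not exact pins
            pins[name.strip().lower()] = version.strip()
    return pins


def vulnerable_pins(lock_text, advisories=ADVISORIES):
    """Return sorted (name, version) pairs that match a known advisory."""
    pins = parse_pins(lock_text)
    return sorted(
        (name, version)
        for name, version in pins.items()
        if version in advisories.get(name, set())
    )
```

The result is binary for each dependency: a pinned version either appears in the advisory data or it does not.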

Similarly, static analysis is useful with regard to well-known patterns such as poor memory management or workflows that can cause buffer overflows. You are either doing the task right or wrong, and the analysis can tell you which one it is. Once an issue is identified, you can go in and do what needs to be done in order to fix it.

Developers often also deal with issues of code complexity. It is easier to write unnecessarily complicated or long code than to keep it short and efficient. Static analysis steps in to identify the places where the code is too complex. Perhaps a function is too long, or a readability score of 8 out of 10 marks it as too complicated. Since these are subjective judgments, they can sometimes be meaningful, while at other times, it is best to disregard them. Static analysis tools only report these judgments; they are not participants in any decision-making process. In cases like these, it is up to the team or the developer to decide whether to take that information into consideration when preparing the code for the next step in the process.
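A "function is too long" check can be sketched in a few lines with Python's `ast` module. The threshold below is an arbitrary assumption, which is exactly why findings like these are a matter of judgment rather than fact.

```python
import ast

MAX_FUNCTION_LINES = 40  # assumed threshold; every team picks its own


def long_functions(source, max_lines=MAX_FUNCTION_LINES):
    """Return (function name, line count) for functions longer than max_lines."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # end_lineno is available on AST nodes from Python 3.8 onwards
            length = node.end_lineno - node.lineno + 1
            if length > max_lines:
                findings.append((node.name, length))
    return findings
```

Lowering or raising `max_lines` changes which functions are reported, so the output is better treated as a prompt for discussion than as a verdict.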

How should you do it?

So, how should you set up static analysis as part of the team’s workflow?

In more objective cases, such as when a library has a high-severity vulnerability or a change introduces security risks, go ahead and block the merges, deploys, and releases. Bear in mind that you need to run the analysis as part of every pull request, and not just on the main branch.
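Blocking usually comes down to a CI step that returns a non-zero exit code. Below is a minimal sketch of such a gate. The shape of a finding (a dict with `id` and `severity` keys) and the choice of blocking severities are assumptions, since every tool has its own report format.

```python
# Assumed policy: only these severities are allowed to block a merge.
BLOCKING_SEVERITIES = {"high", "critical"}


def gate(findings):
    """Return 1 if any finding should block the merge, otherwise 0."""
    blocking = [
        f for f in findings if f["severity"].lower() in BLOCKING_SEVERITIES
    ]
    for f in blocking:
        print(f"BLOCKING: {f['id']} ({f['severity']})")
    return 1 if blocking else 0
```

In a pipeline, the script would end with something like `sys.exit(gate(findings))`, so that the pull request check fails whenever a blocking finding is present.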

Additionally, it is useful to run the static analysis on a schedule. Let's say you have an old repository. It is stable and working. It is almost done being developed and is currently in production, so you are not touching it. You don't want this repository to start running into issues like security vulnerabilities. To avoid this, put together a schedule where you are running that analysis frequently, and keeping the information up-to-date.

However, be careful not to block anything when the situation is more subjective. Regarding issues of code complexity or logic, for instance, blocking your merges or deploys can do significant damage. If you do so, your team will have to work around these blocks or will find them cumbersome and simply mute them. It is evident, then, that blocking these things is not adding any value.

That being said, even if you are not blocking merges and releases, you still want to know if you are trending downhill. A good rule of thumb is to get all the relevant information in a single place. This lets you report on it easily as well as understand if things are trending in the wrong direction.

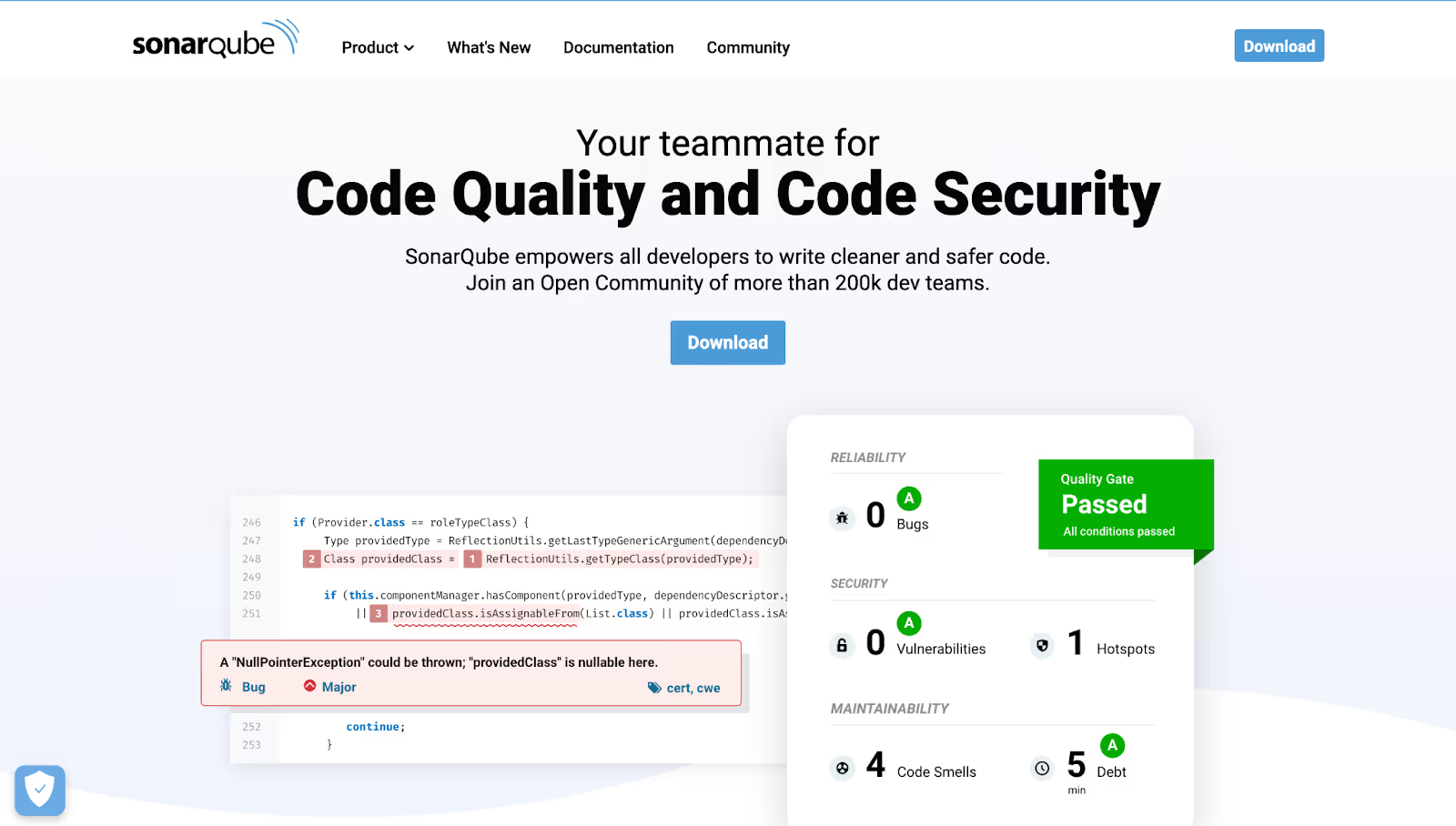

This is where static analysis tools really shine. SonarQube, for example, can act as a single source of truth. You can push a lot of information into SonarQube and ask how things are progressing and what the areas of risk are. Being able to do this is a considerable accomplishment in itself. For those looking to go one step further, a tool like Cortex might be more appropriate. It tracks the specific metrics you care about based on individual services and related aspects such as service ownership.

This way, you are not blocking your team, but you are still treating these metrics as part of a holistic measure of health. If your code coverage is dropping over time, it may not make sense to block releases with rigid rules that end up producing an unintelligent analysis. Say the rule dictates that you must have 80% code coverage, so a release is blocked at 79.9%. You will be better off assessing the particular situation and deciding, for instance, that coverage needs work once it falls to 50%. So how do you track that without blocking releases? By tracking it in a single place.
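Here is a small sketch of what tracking without blocking can look like: a function that turns a service's coverage history into a status for a report. The 50% floor comes from the example above, while the function name, the status labels, and the five-point drop threshold are our own assumptions.

```python
def coverage_status(history, floor=50.0, drop_warn=5.0):
    """Classify chronological coverage percentages without blocking anything.

    floor: below this, the service needs attention (50% as in the text).
    drop_warn: assumed number of percentage points lost since the first
    measurement that counts as a downward trend.
    """
    if not history:
        return "no data"
    latest = history[-1]
    if latest < floor:
        return "needs work"
    if len(history) >= 2 and history[0] - latest >= drop_warn:
        return "trending down"
    return "healthy"
```

With these defaults, a dip from 80% to 79.9% stays "healthy", a slide from 85% to 78% is reported as "trending down", and anything under 50% is marked "needs work". None of these outcomes stops a release.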

So, using static analysis as a metric for subjective aspects such as code quality and service health is fairly valuable. From a more objective or security standpoint, however, you usually want to block merges and releases while continuing to report on the findings.

It is also a good idea to run the analysis on a regular basis and fix the errors or vulnerabilities as soon as possible. There is no point in identifying them if you do not take the appropriate steps to make improvements. Doing this regularly prevents bugs and issues from piling up and delaying the development process. It also allows this practice to become a habit, helping your team to work more efficiently in the long run.

Source: Plerdy

Choosing a tool

Now that we know what the benefits of using a static analysis tool are, let us look at what makes for an effective one. There is no shortage of offerings when it comes to these tools. With the large number of options at your disposal, you might find yourself spending more time than is necessary on choosing a tool that will best work for you.

To facilitate the decision, we have listed some practices to follow and factors to consider when picking out a tool to automate part of your analysis process.

Know your needs

One of the first steps is to identify in what ways you are hoping the static analyses will help you. Are there recurring code quality issues that you are already used to seeing? What kind of insights will your team benefit from? What are the compliance requirements that you need to meet when building the product? For some, the tool must be able to do a good job of checking for compliance with these guidelines. Others may primarily be concerned with code quality.

Although you will not have complete information right off the bat without having first run an analysis, it is good practice to reflect on your team’s current workflows and put some thought into what you are trying to achieve.

Integration with your workflow

Reflection on your present workflow is also necessary to determine how a static analysis tool and practice can be integrated into it. Your team will already be using certain tools and working in specific ways while developing the product. When choosing a static analysis tool, take this into account so that you can incorporate it without running into significant complications. If you have numerous, highly specific analysis requirements, you might prioritize a tool that offers high-quality support for running custom analyses. It also helps to have a relatively stable and streamlined workflow so that the results of the analysis can be considered reliable.

Another important consideration when deciding whether the tool is a good fit is programming language compatibility. This is especially the case for projects that are written in multiple languages. Although many tools can analyze code written in a wide range of languages, the quality of the analysis sometimes varies from one language to another. It is, therefore, best to look at both compatibility and quality when picking a tool.

Finally, tool adoption is essential. If you do not see your team actively using the tool, then it may not be worth investing in. The tool should analyze inconsistencies in the code, not disrupt everyday workflows.

Beware of false positives

It is no secret that developers are sometimes wary of static analysis tools because of the probability of coming across false positives in an analysis. A false positive is cumbersome: it offers no insight and instead adds to the team’s work, as they waste time identifying and dismissing it. The team may begin to lose trust in the tool. However, you can identify false positives and mark them as such so that the tool stops warning you about them in the future. So by putting in some work in the beginning, you can set up the tool to work more effectively in the long term.
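Conceptually, marking a false positive means recording a fingerprint for the finding and filtering it out of later reports. The sketch below assumes a finding is a dict with `rule`, `file`, and `snippet` keys, which is a hypothetical shape. Real tools have their own suppression mechanisms, such as inline comments or baseline files.

```python
def fingerprint(finding):
    """Identify a finding by rule, file, and flagged code rather than by line
    number, so the suppression survives unrelated edits that shift lines."""
    return (finding["rule"], finding["file"], finding["snippet"].strip())


def filter_suppressed(findings, suppressed):
    """Drop findings whose fingerprint was already reviewed as a false positive."""
    return [f for f in findings if fingerprint(f) not in suppressed]
```

A reviewer adds `fingerprint(finding)` to the `suppressed` set once, and subsequent runs only surface findings nobody has looked at yet.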

Quality of coverage

Tool providers will usually list the range of errors, vulnerabilities, and standards that their tools can analyze. While this is valuable, seeing as these need to align with your needs, pay attention to the quality of analysis that the tools offer. Just because a tool can run a static analysis checking for all kinds of vulnerabilities does not necessarily mean it does the job well.

Source: Parasoft

Tools that caught our eye

Each team has distinct needs and workflows that a static analysis tool must fit into. To give you an idea of what your options look like, let’s inspect some of the top players in the game.

SonarQube

One of the most widely used tools, SonarQube is open-source and provides stellar code quality and security analysis services. Its analyses focus on code complexity and coding standards, in addition to discovering vulnerabilities and duplicated code, among other functionality. It automates these processes effectively and runs continuously, so your developers need not spend hours on these tasks and can instead focus on fixing any vulnerabilities or issues that the tool identifies. Not only that, but SonarQube offers detailed descriptions and explanations in its analyses, making it easier for developers to do their jobs.

It supports 29 programming languages, which makes it an attractive option for teams building multi-language products. However, analysis for C, C++, Swift, and a few other languages is proprietary and only available to users who buy a commercial license. It also comes in the form of plug-ins for IDEs and offers pull request analysis on GitHub, Azure DevOps, and GitLab. Integrating SonarQube with Cortex is also an option, and can be used, for instance, to set up a scorecard to measure operational readiness.

In addition to offering integration with your CI/CD pipeline, it can maintain records and also visualize metrics over time. So, teams need not lose sleep over disrupted workflows and can continue to maintain robust documentation. Also, with over 200,000 teams using SonarQube for their static analysis needs, it has a strong community network, which is a huge bonus.

DeepSource

Trusted by companies like Intel and NASA, DeepSource is an easy-to-use static analysis platform. It captures security risks, bugs, and poorly written code before you run the program. It is ideal for teams that are starting out and looking to incorporate a simple yet powerful tool with a gentle learning curve. Setting it up, for instance, requires no installations or configurations and doesn’t take longer than a few minutes. Additionally, users can track dependencies, relevant metrics, and insights from a centralized dashboard. It offers a free plan for smaller teams, and charges per user thereafter.

Like SonarQube, it runs continuous analyses on pull requests and is compatible with several programming languages, including Python, JavaScript, Ruby, and Go. It prides itself on having a vast repository of static analysis rules against which it runs your source code. At the same time, its analyzers are optimized to deliver a false-positive rate of less than 5% and analyze code within 14 seconds on average.

DeepSource goes a step further to allow you to automate certain bug fixes using its Autofix service. This means you can review the bug and ship the fix in one go. It also works with code formatters to automate the formatting process by making a commit with the appropriate change.

Codacy

Codacy is another great offering among static analysis tools. In addition to standard analysis services, it lets users customize rules and establish code patterns in line with their organization’s coding standards. The customization functionality is also useful to get rid of false positives and prevent them from cropping up in the future.

A central dashboard makes it easy to track important metrics and key issues, and to see how code quality and security for the product have progressed over time. Users can also monitor code coverage and receive security notifications as early as possible. Codacy also provides suggestions for fixes, which can save developers time when the issues are relatively minor. Another feature we appreciate is the ability to communicate via inline annotations or commit suggestions when pull requests are created.

Codacy is free for open-source teams. Other teams can choose between two paid plans, depending on their size and needs. It offers support for over 40 programming languages.

Veracode

Veracode’s static analysis tool is one of its many scanning, developer enablement, and AppSec governance offerings. It offers fast scans and real-time feedback of your source code to let you fix issues without any delays.

In addition to being able to analyze code across over 100 programming languages and frameworks, Veracode can also integrate with more than forty tools and APIs. It does not, however, compromise on quality, instead offering end-to-end inspection and prioritization of issues based on their false-positive rates. Users can also easily track the analyses, including security-related and compliance activity. Overall, this makes for a pleasant developer experience.

Coverity

Synopsys is another company with multiple products for software development teams, including its static analysis tool, Coverity. It emphasizes its ability to help developers locate issues and vulnerabilities early in the development life cycle without interrupting their workflows.

For instance, users can easily integrate it into their CI workflows and REST APIs. Coverity also works in tandem with the Code Sight IDE plug-in to give developers real-time alerts as well as recommendations for possible solutions. Its automation also allows it to support and analyze the code for heavier software.

Coverity is available for infrastructures irrespective of whether they are located on-premises or in the cloud. Additionally, it is compatible with 22 languages and more than 70 frameworks.

The tool prioritizes security, allowing users to easily monitor compliance requirements and coding standards with Coverity’s reporting features.

Better sooner than later

Static analysis is a powerful way of monitoring the status and quality of your code in the early stages of development. Establishing it as a long-term practice in your teams is not only beneficial but fairly easy to do, given the levels of accuracy and efficiency static analysis tools can achieve today. Besides improving the quality of your code, running a static analysis is essential for highlighting vulnerabilities and ensuring your product is secure.

Although different tools may work for different teams, it is futile to spend too much time on finding the ‘right’ one. Start by reflecting on what your team’s needs are and what will cause the least amount of disruption in their workflows. Go with the tool that best aligns with your requirements and get started!