You're in a planning meeting when someone asks a simple question. How long does it actually take your team to ship a feature? You've got spreadsheets, Git logs, and Jira exports scattered across three tabs, and you still can't give a confident answer. It's a question you should be able to answer instantly, but the data lives in too many places to stitch together on the fly.

This is the problem engineering intelligence platforms are designed to solve. They aggregate data from Git, Jira, CI/CD pipelines, and incident management tools into unified dashboards that surface DORA metrics, PR cycle times, and AI tool adoption. The market has grown quickly in recent years, with dozens of vendors promising to give you that confident answer about team productivity.

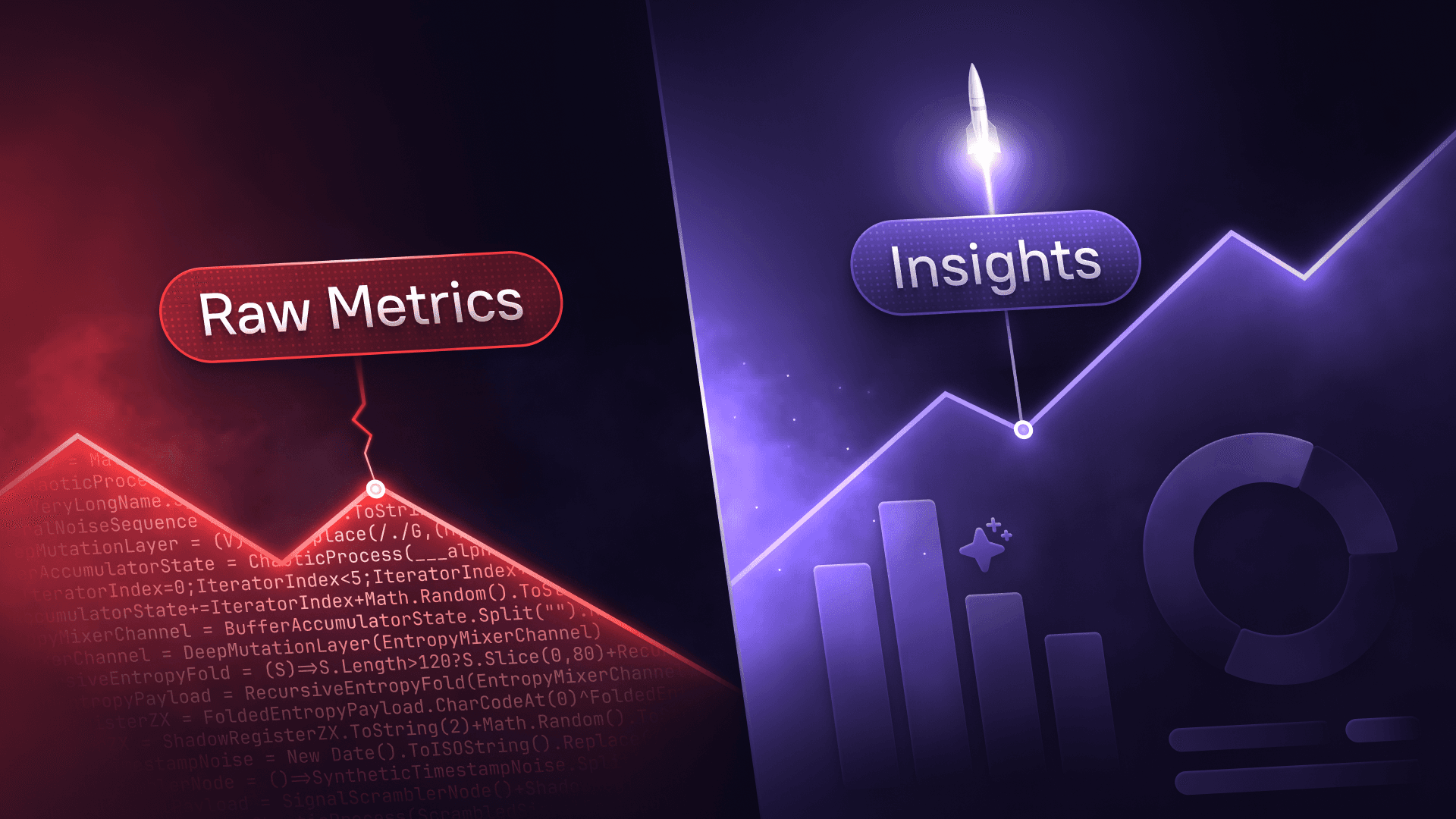

These platforms can identify inefficiencies, justify headcount requests, and track the impact of process changes. But most vendors won't tell you that metrics alone don't drive improvement. You can have the most beautiful dashboard showing elevated cycle times or declining deployment frequency, but unless you can connect those signals to specific services, teams, and action items, you're just staring at numbers. The real value comes from platforms that help you translate insights into concrete improvements.

This guide covers the leading platforms in 2026, what each does well, where they fall short, and which capabilities actually matter when you need to know how long it takes to ship something.

What is an engineering intelligence platform?

Engineering intelligence platforms aggregate data from your development tools (Git, Jira, CI/CD, incident management) to provide visibility into how your engineering organization operates. These platforms track everything from commit frequency and PR review time to deployment success rates and the downstream impact of production incidents.

Most platforms focus on a core set of delivery metrics. DORA metrics (deployment frequency, lead time for changes, change failure rate, and time to restore service, which many tools report as mean time to recovery) have become the industry standard for measuring software delivery performance. Many tools also track developer workflow metrics like PR cycle time, work-in-progress limits, and code review patterns. Think of these as the vital signs for your engineering organization.
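To make the four DORA metrics concrete, here is a minimal sketch of how they could be computed from a list of deployment records. The `Deployment` record and its field names are assumptions made for illustration, not any vendor's schema, and real platforms differ in details such as whether they report medians or means.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime                  # first commit of the change
    deployed_at: datetime                   # when the change reached production
    failed: bool = False                    # did the change cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deploys: list[Deployment], window_days: int) -> dict:
    """Compute the four DORA metrics over a fixed observation window."""
    if not deploys:
        raise ValueError("no deployments in the window")
    failures = [d for d in deploys if d.failed]
    restore_times = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deployment_frequency_per_day": len(deploys) / window_days,
        "median_lead_time": median(d.deployed_at - d.committed_at for d in deploys),
        "change_failure_rate": len(failures) / len(deploys),
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }


# Hypothetical week of deployments.
t0 = datetime(2026, 1, 5, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0, t0 + timedelta(hours=10), failed=True,
               restored_at=t0 + timedelta(hours=11)),
    Deployment(t0, t0 + timedelta(hours=22)),
    Deployment(t0, t0 + timedelta(hours=30)),
]
metrics = dora_metrics(deploys, window_days=7)
```

The arithmetic is the easy part. The hard part, and the reason these platforms exist, is getting trustworthy `committed_at`, `deployed_at`, and incident timestamps out of several tools in the first place.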

The best platforms help you understand why certain metrics are trending in a particular direction and provide frameworks for improvement. Some include developer surveys to complement quantitative data with sentiment. Others offer workflow automation to reduce toil. The most sophisticated solutions connect metrics to service ownership so you can route improvement initiatives to the right teams.

What to look for when evaluating tools

Before diving into specific vendors, consider what matters most for your organization. Different platforms excel in different areas, and the right choice depends on your team's maturity, size, and specific pain points.

Integration breadth matters more than you think

Your platform needs clean data from Git, Jira, your CI/CD pipeline, and ideally your incident management tool. Check whether vendors support your specific stack before committing. Some platforms require extensive configuration or custom API work to get data flowing correctly. Others provide plug-and-play integrations but may not support niche tools or on-premise systems.

Think about who will actually use this tool

Some platforms target individual contributors with Slack notifications and workflow automation. Others focus on executives who need portfolio-level visibility and board-ready reports. The best platforms serve multiple personas, but most optimize for a primary user. Make sure the UX matches how your team actually works.

Metrics are just the starting point

Any platform can show you a dashboard of DORA metrics. The differentiation comes from what happens next. Can you drill down to see which services or teams are outliers? Can you track initiatives over time to measure whether process changes actually improved performance? Can you connect service ownership to metrics so the right people get alerted when something needs attention?
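As a rough illustration of what "drill down and route to an owner" means in practice, the sketch below flags services whose PR cycle time is far above the median and groups them by owning team. The service names, the ownership map, and the "twice the median" threshold are all assumptions chosen for the example.

```python
from statistics import median

# Hypothetical data: median PR cycle time (hours) per service, plus an ownership map.
cycle_time_hours = {
    "checkout": 18.0, "search": 22.0, "billing": 71.0,
    "auth": 20.0, "notifications": 25.0,
}
owners = {
    "checkout": "payments-team", "billing": "payments-team",
    "search": "discovery-team", "auth": "platform-team",
}


def find_outliers(metric_by_service: dict[str, float], factor: float = 2.0) -> list[str]:
    """Return services whose metric exceeds `factor` times the cross-service median."""
    baseline = median(metric_by_service.values())
    return sorted(s for s, v in metric_by_service.items() if v > factor * baseline)


def route_to_owners(services: list[str], ownership: dict[str, str]) -> dict[str, list[str]]:
    """Group flagged services by owning team; services with no owner go under 'UNOWNED'."""
    routed: dict[str, list[str]] = {}
    for service in services:
        routed.setdefault(ownership.get(service, "UNOWNED"), []).append(service)
    return routed


alerts = route_to_owners(find_outliers(cycle_time_hours), owners)
# alerts == {"payments-team": ["billing"]}
```

Note the `UNOWNED` bucket: a service with no recorded owner is exactly the case where a red number ends up with nobody to act on it.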

Free tiers and trials help you test before committing

Several vendors now offer freemium models or generous free trials. Take advantage of these to validate that the platform works with your data and provides insights your team will actually use. Pay attention to how much manual configuration is required and whether the vendor provides implementation support.

With those criteria in mind, here's how the leading platforms stack up.

Top engineering intelligence platforms

Cortex

Cortex takes a different approach than most engineering intelligence vendors. Rather than offering metrics in isolation, Cortex combines engineering analytics with a service catalog that maps your entire software architecture, including ownership, dependencies, and quality standards.

This integration becomes valuable when you want to move from observation to action. When you spot an elevated cycle time or declining deployment frequency, you can immediately see which services are affected, who owns them, and what initiatives are already underway to address similar issues. Cortex also provides Scorecards for tracking improvement initiatives and Workflows for automating common tasks.

The platform includes comprehensive DORA metrics, PR cycle time analysis, code quality tracking, and upcoming AI impact measurement. Cortex integrates with most development tools and offers broad customization for teams with specific workflow requirements. The UX is designed for teams that want deep visibility and control.

Key capabilities

Delivery metrics: Tracks deployment frequency, lead time for changes, change failure rate, and mean time to recovery across services and teams.

Service ownership: Connects every metric to the service catalog so you know exactly which team needs to take action on any outlier.

Initiative tracking: Scorecards let you define improvement goals and measure progress over time, showing whether interventions actually worked.

Workflow automation: Automates repetitive tasks and provides notifications when metrics cross defined thresholds.

Custom dashboards: Build personalized views using any data point in the catalog (deployments, incidents, or pager load) to tell the story that matters to your stakeholders.

AI impact: Upcoming capabilities to measure adoption and productivity impact of GitHub Copilot and other AI coding assistants.

What Cortex does well

Cortex shines when you need to answer the inevitable question that comes after you identify a problem: Who's fixing it?

The service catalog ties every metric back to a real owner, which sounds simple until you realize how many platforms just show you a red number and leave you to figure out the rest. When cycle time spikes for a particular service, you're not hunting through Slack threads or old wiki pages to find the right team. You can see who owns it, what it depends on, and whether someone's already working on a fix. That's the difference between a metric that gets ignored and one that starts a conversation.

Scorecards are where Cortex earns its keep for larger organizations. Instead of setting improvement goals in a spreadsheet and hoping teams remember to update it, you define what "good" looks like and track which services hit the bar. This becomes particularly valuable for cross-cutting initiatives. When you're trying to improve test coverage or reduce deployment risk across fifty services, you need a way to see who's made progress and who needs a nudge.
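The general idea of defining "good" as a set of rules and checking each service against them can be sketched in a few lines. This is not Cortex's Scorecards syntax or API; the rule names, thresholds, and service facts below are hypothetical and only illustrate the concept.

```python
from typing import Callable

# Hypothetical per-service facts; in practice these would come from your tooling.
services = {
    "checkout": {"test_coverage": 0.82, "has_oncall": True, "deploys_per_week": 5},
    "search": {"test_coverage": 0.61, "has_oncall": True, "deploys_per_week": 2},
    "billing": {"test_coverage": 0.75, "has_oncall": False, "deploys_per_week": 1},
}

# Each rule names one aspect of "good" and a predicate that checks it.
rules: dict[str, Callable[[dict], bool]] = {
    "coverage >= 70%": lambda s: s["test_coverage"] >= 0.70,
    "on-call rotation defined": lambda s: s["has_oncall"],
    "deploys at least weekly": lambda s: s["deploys_per_week"] >= 1,
}


def evaluate(service_facts: dict, rule_set: dict) -> dict:
    """Return the passing fraction and the names of failed rules for one service."""
    failed = [name for name, check in rule_set.items() if not check(service_facts)]
    return {"score": (len(rule_set) - len(failed)) / len(rule_set), "failed": failed}


report = {name: evaluate(facts, rules) for name, facts in services.items()}
needs_nudge = sorted(name for name, result in report.items() if result["failed"])
# needs_nudge == ["billing", "search"]
```

Re-running the same rules every week gives you the trend line a spreadsheet never does: which services moved from failing to passing, and which have not changed.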

The platform also handles complexity without requiring you to become a power user on day one. You can customize dashboards, define your own metrics, and wire up workflows that match how your team actually operates. The integration ecosystem pulls from most of the tools you're already using, which means less time wrestling with data pipelines and more time actually using the insights.

Jellyfish

Jellyfish positions itself as an engineering management platform built for the conversation between engineering and finance. If you spend a lot of time explaining to your CFO why you need more headcount or where your engineering budget actually goes, Jellyfish is designed for that problem.

The platform pulls data from Git, Jira, CI/CD tools, incident management systems, and even finance tools to map engineering effort to business results. Founded in 2017 in Boston with over $100M in Series C funding, Jellyfish targets mid-to-large organizations where engineering leaders regularly present to the board.

Key capabilities

Financial alignment: Dedicated solutions for finance teams to automate software capitalization, forecast engineering costs, and align R&D spend with business outcomes.

Business alignment: Maps engineering work to business initiatives and revenue impact, helping justify resource allocation decisions.

DORA and workflow metrics: Provides standard delivery metrics plus detailed analysis of PR throughput, cycle time, and code review patterns.

AI impact analysis: Tracks adoption and productivity impact of GitHub Copilot, Cursor, Gemini Code Assist, and Sourcegraph. Includes detailed breakdowns by user, programming language, and usage patterns (power users vs. casual users).

Executive reporting: Dashboards designed for board presentations and finance stakeholder meetings.

What Jellyfish does well

Jellyfish is purpose-built for executive communication. If your primary challenge is explaining engineering investment to business stakeholders, the reporting and visualization tools are designed for that audience. The AI impact analysis includes comparisons across different coding assistants, which can be useful during renewal discussions.

Where Jellyfish could improve

Jellyfish lacks a robust service catalog, which creates a gap when you need to connect metrics to specific owners. You can see that cycle time is up, but figuring out which team should address it requires work outside the platform. The enterprise focus also means smaller teams will likely find the pricing out of reach, and there's no free tier to test before committing to a sales conversation.

LinearB

LinearB focuses on team-level DevOps metrics and workflow automation. The headline feature is WorkerB, a Slack bot that nudges developers about stale PRs and pending reviews. If your biggest problem is PRs sitting in review purgatory, that automation can help.

The platform offers a freemium model, which makes it accessible for teams that want to start tracking metrics without budget approval. Founded in 2018 in Israel with $70M in Series B funding, LinearB targets developers and team leads at mid-market companies.

Key capabilities

DORA metrics and PR analytics: Standard delivery metrics plus detailed visibility into PR cycle time, review bottlenecks, and merge patterns.

WorkerB automation: Slack bot that automates PR reminders, status updates, and other workflow tasks to reduce team coordination overhead.

AI adoption tracking: Basic visibility into GitHub Copilot usage.

Modern UX: LinearB is known for having one of the more user-friendly interfaces in the category.

What LinearB does well

LinearB is easy to get started with. The freemium model means you can connect your repos and start seeing data without talking to sales. The WorkerB Slack bot does reduce some coordination overhead, and the UX is clean enough that developers might actually look at the dashboards.

Where LinearB could improve

Beyond PR nudges, LinearB largely stops at visibility. There's no service catalog, so connecting metrics to ownership requires manual work. There's no initiative tracking, so you can't easily measure whether the process changes you made actually improved anything. Code quality metrics are absent, and some teams find the Jira integration less robust than they need. It's a good starting point, but teams often outgrow it as their needs mature.

Swarmia

Swarmia takes a team-habits approach to engineering intelligence. The core idea is that you define "working agreements" (norms around PR size, review time, WIP limits) and the platform tracks whether teams are sticking to them.

Founded in 2019 in Finland with $11M in Series A funding, Swarmia offers a free tier and positions itself around transparency and team autonomy rather than top-down measurement.

Key capabilities

Working agreements: Framework for teams to establish norms around PR size, review time, and work-in-progress limits.

DORA metrics and PR workflow: Standard delivery metrics with detailed analysis of PR patterns and review effectiveness.

AI usage tracking: Monitors adoption for GitHub Copilot and Cursor, with some visibility into AI activity alongside productivity metrics.

Initiative tracking: Basic support for tracking improvement initiatives over time.

What Swarmia does well

The working agreements concept is genuinely interesting. Rather than just showing you metrics, it helps teams define what "good" looks like and tracks whether they're hitting those targets. The UI is clean, and the free tier makes it easy to experiment without commitment.
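To show what checking a working agreement might involve, here is a small sketch that compares open PRs against team norms for size, review time, and work in progress. It does not reflect Swarmia's implementation; the `WorkingAgreement` fields and default limits are assumptions picked for the example.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    number: int
    lines_changed: int
    hours_to_first_review: float


@dataclass
class WorkingAgreement:
    max_lines_changed: int = 400             # assumed team norm for PR size
    max_hours_to_first_review: float = 24.0  # assumed team norm for review time
    max_open_prs: int = 5                    # assumed WIP limit for the team


def check_agreement(open_prs: list[PullRequest], agreement: WorkingAgreement) -> list[str]:
    """Return human-readable violations of the team's working agreement."""
    violations = []
    for pr in open_prs:
        if pr.lines_changed > agreement.max_lines_changed:
            violations.append(f"PR #{pr.number}: {pr.lines_changed} lines exceeds size limit")
        if pr.hours_to_first_review > agreement.max_hours_to_first_review:
            violations.append(f"PR #{pr.number}: waited {pr.hours_to_first_review:g}h for review")
    if len(open_prs) > agreement.max_open_prs:
        violations.append(f"{len(open_prs)} open PRs exceeds WIP limit")
    return violations


prs = [PullRequest(101, 120, 3.0), PullRequest(102, 950, 30.0)]
violations = check_agreement(prs, WorkingAgreement())
```

The limits live in one place and are set by the team, which is the point of the approach: the numbers are norms the team agreed to, not targets handed down from above.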

Where Swarmia could improve

Swarmia is narrowly focused. While AI tracking provides some context alongside productivity metrics, the depth of analysis is limited compared to platforms with dedicated AI impact modules. There's no public API for custom integrations or data export. Service catalog and ownership capabilities are minimal, which means the platform can tell you a metric is off but can't help you figure out who should fix it. It works for teams that want lightweight habit tracking, but it won't scale to organization-wide visibility.

DX

DX is built by researchers behind the DORA and SPACE frameworks, which gives it credibility in academic circles. The pitch is combining quantitative delivery metrics with developer sentiment surveys for a fuller picture of engineering effectiveness.

Founded in 2021, DX takes an academic approach. The platform offers extensive customization and complex reporting, which appeals to teams that want research-grade rigor in their measurement.

Key capabilities

Research-backed frameworks: Built on DORA and SPACE methodologies by the teams that developed these frameworks.

Quantitative and qualitative data: Integrates developer surveys directly into dashboards alongside delivery metrics.

Flexible analytics: Deep customization options for teams with specific reporting needs.

AI adoption visibility: Tracks AI tool usage alongside other productivity metrics.

Benchmarking: Compare your metrics against industry benchmarks to understand how your team performs relative to peers.

What DX does well

If you care deeply about measurement validity and want frameworks backed by peer-reviewed research, DX delivers that. The developer survey integration is useful for understanding sentiment alongside delivery metrics, and the platform offers more analytical flexibility than most competitors.

Where DX could improve

DX's academic rigor comes with academic complexity. The platform requires significant setup, and the UX assumes you want to configure everything yourself. Teams looking for quick, opinionated defaults will find it overwhelming. There's no workflow automation, so you can identify problems but can't easily act on them within the platform. Service catalog functionality is basic, which means ownership mapping happens outside the tool.

Uplevel

Uplevel focuses on developer wellbeing rather than delivery metrics. The pitch is that sustainable productivity requires healthy developers, so the platform monitors burnout signals, work patterns, and collaboration health.

Founded in 2018 in Seattle with $34M in funding, Uplevel targets organizations where engineering leadership partners closely with HR on retention and developer experience initiatives.

Key capabilities

Burnout detection: Analyzes work patterns to identify developers at risk of burnout based on after-hours activity, meeting load, and work intensity.

PR velocity and feature work time: Tracks how much time developers spend on feature development vs. meetings, email, and other non-coding activities.

Work pattern analysis: Visibility into how developers spend their time, with some attention to AI tool usage in the context of broader productivity patterns.

People metrics: Dashboard designed for HR teams and engineering leaders focused on team health and retention.
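One of the signals named above, after-hours activity, is simple enough to sketch. The function below computes the share of activity timestamps that fall outside working hours or on weekends. It is a simplified illustration, not Uplevel's method: the 9:00 to 18:00 window is an assumption, and timestamps are assumed to already be in each developer's local time.

```python
from datetime import datetime, time


def is_after_hours(ts: datetime, start: time, end: time) -> bool:
    """True for weekend activity or weekday activity outside start..end."""
    return ts.weekday() >= 5 or not (start <= ts.time() < end)


def after_hours_share(
    timestamps: list[datetime],
    start: time = time(9, 0),
    end: time = time(18, 0),
) -> float:
    """Fraction of activity that happened after hours (0.0 if there is no activity)."""
    if not timestamps:
        return 0.0
    return sum(is_after_hours(ts, start, end) for ts in timestamps) / len(timestamps)


# Hypothetical commit times for one developer (local time).
commits = [
    datetime(2026, 1, 5, 10, 30),   # Monday morning
    datetime(2026, 1, 5, 22, 15),   # Monday late evening
    datetime(2026, 1, 6, 14, 0),    # Tuesday afternoon
    datetime(2026, 1, 10, 11, 0),   # Saturday
]
share = after_hours_share(commits)
# share == 0.5
```

A number like this is a prompt for a conversation about workload, not a verdict. Someone who prefers working evenings will score high without being at any risk.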

What Uplevel does well

Uplevel addresses a real concern that most platforms ignore. If developer retention is a priority and you want early warning signs about burnout, the work pattern analysis can surface issues before they become resignations. It's useful for managers who want to have informed conversations about workload.

Where Uplevel could improve

Uplevel isn't really an engineering intelligence platform in the traditional sense. DORA metric coverage is partial, code quality tracking is absent, and there's no service catalog or ownership mapping. You won't use this to understand delivery performance or drive improvement initiatives. It's a narrowly focused tool that might complement a broader platform, but it won't replace one.

Code Climate

Code Climate is one of the older players in this space, founded in 2011 in New York City with $66M in Series C funding. The platform combines engineering metrics with professional services, targeting large enterprises that want consultative support alongside their tooling.

Code Climate's heritage is in code quality analysis, and that remains its strongest capability.

Key capabilities

Code quality metrics: Deep analysis of code complexity, maintainability, test coverage, and technical debt.

DORA and delivery metrics: Standard delivery performance metrics plus PR workflow analysis.

AI-assisted reviews: Some integration with AI tools for code review automation, though not a dedicated AI impact measurement module.

Enterprise-grade security: On-premise connectors and support for air-gapped environments.

Professional services: Code Climate offers consulting to help enterprises interpret data and design improvement programs.

What Code Climate does well

Code quality analysis is where Code Climate has depth. Technical debt tracking, maintainability scores, and test coverage analysis are mature capabilities. The enterprise focus means support for on-premise and air-gapped environments, which matters for regulated industries.

Where Code Climate could improve

Code Climate lacks a service catalog and ownership mapping, so the same gap seen with Jellyfish and LinearB exists here. You can see code quality issues, but connecting them to the teams responsible requires work outside the platform. Custom reporting seems to require professional services engagement, which slows things down if you want self-service analytics. The enterprise pricing and heavyweight approach also put it out of reach for smaller organizations.

Faros AI

Faros AI takes a graph-based approach to engineering data, representing your development tools as a knowledge graph that you can query for relationships and dependencies. The platform was founded by former Salesforce Einstein team members and positions itself as AI-native.

Founded in 2020 in San Francisco with $29M in Series A funding, Faros targets data-sophisticated organizations that want flexibility over opinionated defaults.

Key capabilities

Graph-based data model: Represents engineering data as a knowledge graph, making it easier to query relationships and track dependencies.

Comprehensive integrations: Broad connector ecosystem for ingesting data from development, incident, project management, and business tools.

AI impact measurement: Tracks GitHub Copilot adoption and productivity impact with AI-powered summary insights.

Open source offering: Faros provides an open source community edition for teams that want to self-host.

ROI calculation: Measures the dollar impact of engineering investments and AI tools.

What Faros AI does well

The graph-based architecture is technically interesting and provides powerful querying once you invest in setup. The open source edition lets teams experiment before committing to the commercial platform, which is a nice option for organizations that want to validate the approach first.
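To give a feel for why a graph model helps with relationship questions, here is a toy sketch that stores engineering data as (source, relation, target) triples and walks dependencies transitively. It says nothing about Faros AI's actual schema or query language; every node name and relation below is hypothetical.

```python
from collections import defaultdict

# Edges as (source, relation, target) triples; all names are hypothetical.
edges = [
    ("team:payments", "owns", "service:checkout"),
    ("service:checkout", "depends_on", "service:auth"),
    ("service:auth", "depends_on", "service:user-db"),
    ("incident:1042", "affected", "service:user-db"),
]

# Index edges by (source, relation) for quick traversal.
graph: dict[tuple[str, str], list[str]] = defaultdict(list)
for src, rel, dst in edges:
    graph[(src, rel)].append(dst)


def transitive(node: str, relation: str) -> set[str]:
    """All nodes reachable from `node` by repeatedly following `relation` edges."""
    seen: set[str] = set()
    stack = [node]
    while stack:
        for nxt in graph.get((stack.pop(), relation), []):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


# Which incidents touched anything the payments team's services depend on?
owned = graph[("team:payments", "owns")]
exposed = set(owned).union(*(transitive(s, "depends_on") for s in owned))
incidents = sorted(src for src, rel, dst in edges if rel == "affected" and dst in exposed)
# incidents == ["incident:1042"]
```

The question crosses three tools (ownership, architecture, incidents) in a single traversal, which is awkward to express against flat per-tool tables.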

Where Faros AI could improve

Faros requires significant technical investment to use effectively. The flexible data model means you're building your own data pipelines and configurations rather than getting opinionated defaults out of the box. The UX assumes a high degree of technical comfort, so this isn't a platform you hand to a new engineering manager and expect them to find value immediately. There's also no workflow automation, which means the gap between insight and action remains your problem to solve.

Other platforms worth considering

Beyond these primary players, a few other tools are worth knowing about depending on your specific situation.

Pluralsight Flow (formerly GitPrime) bundles engineering metrics into Pluralsight's skills platform. It's a functional option if you're already heavily invested in their training ecosystem, but the interface and feature set feel a step behind the dedicated platforms that have emerged recently.

Allstacks is interesting if your main headache is project estimation. It uses historical data to forecast delivery dates, which can be helpful for teams struggling with predictability, but its focus is narrower than the broader intelligence platforms.

Hatica plays in the same "developer wellbeing" sandbox as Uplevel. It's a newer entrant with a decent interface, but it's still maturing relative to the established players. Worth a look if burnout prevention is your only priority.

Waydev doubles down on Git-based metrics with a heavy focus on individual contributor productivity. It works fine for smaller teams who just want the numbers, but it lacks the enterprise-grade governance and integration depth of the category leaders.

Apache DevLake is the open source option for teams who want to build it themselves. You get complete control and zero vendor lock-in, but you pay for it in maintenance hours. Great for strong platform engineering teams, but it can be a distraction for everyone else.

Span is the new kid on the block, positioning itself as the "AI-native" alternative. It claims to use LLMs to clean up messy data without manual hygiene and detect AI-generated code in production. It's a compelling vision for forward-thinking teams, but as a seed-stage startup, it lacks the proven enterprise track record of the market leaders.

Many organizations also build in-house solutions using Tableau or similar BI tools connected to Jira and Git exports. This approach provides complete control but requires ongoing engineering investment to maintain data pipelines and dashboards. Custom solutions rarely match the depth of purpose-built engineering intelligence platforms.
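For a sense of what the in-house route involves at its simplest, the sketch below joins a Jira-style export with a deployment log to answer the opening question of how long it takes to ship. The CSV column names and issue keys are assumptions made for the example, not a real Jira or Git export format.

```python
import csv
import io
from datetime import datetime
from statistics import median

# Hypothetical exports; column names are assumptions, not a real Jira or Git schema.
jira_csv = """issue_key,started_at
SHOP-1,2026-01-05T09:00:00
SHOP-2,2026-01-06T10:00:00
SHOP-3,2026-01-07T09:00:00
"""
deploys_csv = """issue_key,deployed_at
SHOP-1,2026-01-08T09:00:00
SHOP-2,2026-01-13T10:00:00
"""


def load(text: str, time_column: str) -> dict[str, datetime]:
    """Map each issue key to a parsed timestamp from one CSV export."""
    return {
        row["issue_key"]: datetime.fromisoformat(row[time_column])
        for row in csv.DictReader(io.StringIO(text))
    }


started = load(jira_csv, "started_at")
deployed = load(deploys_csv, "deployed_at")

# Only issues present in both exports have shipped; the rest are still in flight.
days_to_ship = {
    key: (deployed[key] - started[key]).days
    for key in started.keys() & deployed.keys()
}
median_days = median(days_to_ship.values())
in_flight = sorted(started.keys() - deployed.keys())
# median_days == 5.0, in_flight == ["SHOP-3"]
```

The join itself is a dozen lines. The ongoing cost is everything around it: keeping issue keys linked to deployments, handling reopened tickets, and rerunning the exports when schemas change.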

Metrics are only useful when they drive change

Choosing the right platform ultimately comes down to whether the tool will actually help you get better.

Engineering intelligence platforms have become table stakes for modern software organizations. But the most beautiful DORA dashboard in the world is useless if it just hangs on a monitor that nobody looks at. The real value lies in using measurement to drive movement.

As you evaluate these tools, look for the capabilities that bridge the gap between "knowing" and "doing":

Does it connect data to ownership? You shouldn't have to hunt down who owns a service when a metric goes red. The platform should tell you.

Does it track improvement? Dashboards show you the present. You need tools (like Scorecards or initiative tracking) that show you whether your interventions are actually changing the future.

Does it automate the fix? If the platform can nudge a developer about a stale PR or automate a cleanup task, it's saving you time, not just reporting on how you spent it.

Start with your primary pain point, whether that's justifying headcount to finance, reducing review cycles, or preventing burnout. Then pick the tool that solves that specific problem. But remember that the goal isn't just to build a better dashboard. It's to build a better engineering organization. The right platform is the one that helps you do that.

Book a demo with Cortex today to see how we turn metrics into meaningful engineering improvement.